Mastering Azure Data Factory for Seamless Data Integration

- Ratheesh Kumar

- Sep 24, 2024

Updated: Oct 16, 2024

In the world of modern data management, businesses are inundated with data coming from multiple sources, both cloud and on-premises. This data, crucial for decision-making and strategic planning, needs to be moved, transformed, and integrated seamlessly. This is where Azure Data Factory (ADF) steps in. As Microsoft’s cloud-based ETL (Extract, Transform, Load) service, Azure Data Factory offers a simple and efficient way to manage data movement and transformation across multiple sources. In this blog, we will explore what Azure Data Factory is, its features, and how you can leverage it for your data integration needs.

What is Azure Data Factory?

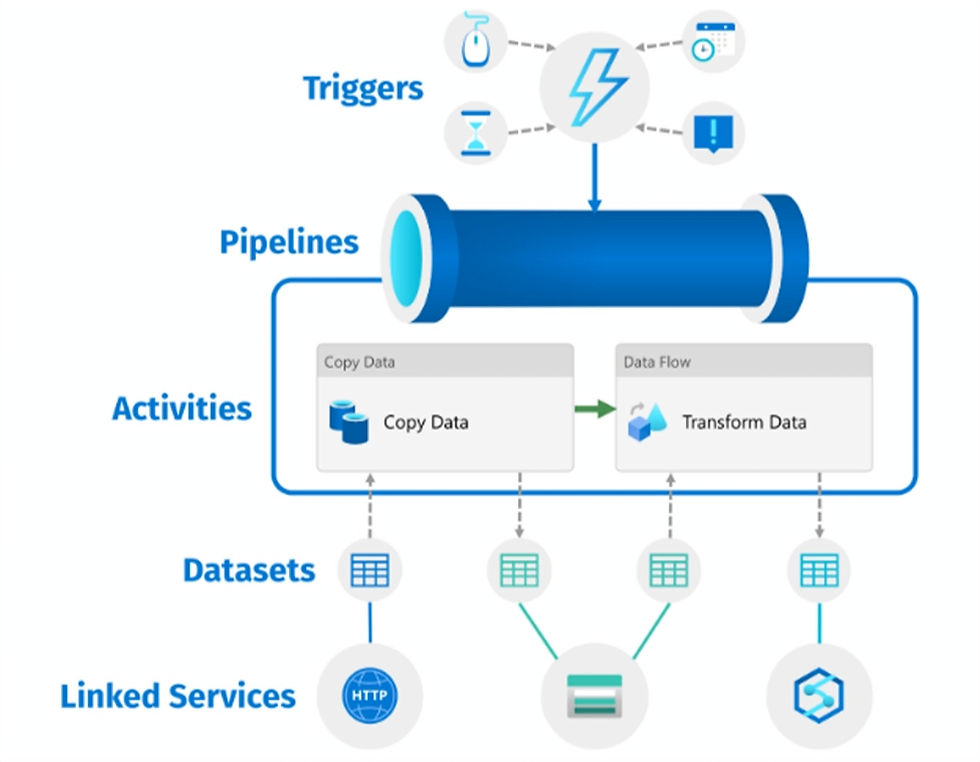

Azure Data Factory is a fully managed, cloud-based service for ETL processes that enables data engineers to move data from one store to another and transform it along the way. It simplifies the creation, scheduling, and orchestration of data pipelines, which automate the movement of data between different data stores.

With Azure Data Factory, you can:

- Ingest data from diverse sources like SQL databases, APIs, cloud platforms, and on-premises servers.

- Transform data through various activities like data filtering, joining, and aggregation.

- Load processed data into your destination storage for analytics, reporting, or further transformations.

Key Features of Azure Data Factory

1. Data Pipelines for Seamless ETL

Azure Data Factory allows you to create data pipelines that define data flows between your sources and destinations. These pipelines support a variety of activities, such as copying data, executing SQL queries, or performing complex transformations. With ADF, you can design end-to-end data movement processes without writing complex scripts.
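Behind the visual designer, a pipeline is stored as a JSON document. The sketch below builds a minimal one-activity pipeline definition as a Python dictionary to show its general shape. The pipeline, activity, and dataset names are hypothetical, and the source and sink types are assumptions that would need to match the datasets you actually use.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return a pipeline definition containing a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopySourceToSink",  # hypothetical activity name
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        # Assumed types: a delimited-text source and an Azure SQL sink.
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                }
            ]
        },
    }


# Hypothetical names, for illustration only.
pipeline = build_copy_pipeline("CopySalesPipeline", "SalesCsvDataset", "SalesSqlDataset")
print(json.dumps(pipeline, indent=2))
```

Generating the definition from a function like this is one way to keep many similar pipelines consistent, though for a handful of pipelines the visual designer is usually enough.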

2. Integration with Cloud and On-Premises Data Sources

One of ADF’s strengths is its ability to connect with a wide range of data sources, both cloud-based (e.g., Azure Blob Storage, Amazon S3) and on-premises (e.g., SQL Server, Oracle). ADF can also handle hybrid scenarios where some of your data resides on-premises and some in the cloud, all while maintaining secure connectivity.

3. Code-Free Data Transformation

While some data integration tools require extensive coding, ADF provides a code-free interface for many common data transformations. Using the visual designer, you can build complex workflows by dragging and dropping pre-configured activities.

4. Monitoring and Alerts

Azure Data Factory includes a robust monitoring dashboard, which allows you to track the performance of your pipelines in real time. You can set up alerts to notify your team of any issues like failed pipelines or resource limitations, ensuring your data workflows run smoothly.
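The same run history shown in the dashboard can also be queried programmatically. As a rough sketch, the function below builds the request body for the management REST API's `queryPipelineRuns` operation, filtered to failed runs in a recent time window. It only constructs the body and sends nothing; the function name and the 24-hour window are assumptions made for illustration.

```python
import json
from datetime import datetime, timedelta, timezone


def failed_runs_query(hours_back, now=None):
    """Build a filter body selecting pipeline runs that failed in the last N hours."""
    now = now or datetime.now(timezone.utc)
    return {
        "lastUpdatedAfter": (now - timedelta(hours=hours_back)).isoformat(),
        "lastUpdatedBefore": now.isoformat(),
        "filters": [
            {"operand": "Status", "operator": "Equals", "values": ["Failed"]}
        ],
    }


# Example: failed runs over the past day.
print(json.dumps(failed_runs_query(24), indent=2))
```

A body like this would be sent with an authenticated POST request to the factory's `queryPipelineRuns` endpoint. For routine alerting, the built-in alert rules are likely simpler than polling.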

Getting Started with Azure Data Factory

1. Step-by-Step Guide to Creating Your First Pipeline

Getting started with Azure Data Factory is straightforward. To create your first pipeline:

Step 1: Navigate to the Azure Portal and search for Azure Data Factory.

Step 2: Create a new Data Factory resource, specifying your subscription and resource group.

Step 3: After provisioning, use the Data Factory UI to define your data sources, transformation activities, and destination storage.

Step 4: Trigger the pipeline to execute the ETL process and monitor its status from the dashboard.
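Step 4 can also be done outside the portal. The management REST API exposes a `createRun` operation on each pipeline. The sketch below only assembles the URL for that call, so it runs without credentials. The subscription ID, resource group, factory, and pipeline names are placeholders, and the helper function is hypothetical.

```python
from urllib.parse import quote

API_VERSION = "2018-06-01"  # API version used by the Data Factory management endpoints


def create_run_url(subscription_id, resource_group, factory, pipeline):
    """Assemble the management URL that starts a run of the given pipeline."""
    path = "/".join([
        "subscriptions", quote(subscription_id, safe=""),
        "resourceGroups", quote(resource_group, safe=""),
        "providers", "Microsoft.DataFactory",
        "factories", quote(factory, safe=""),
        "pipelines", quote(pipeline, safe=""),
        "createRun",
    ])
    return f"https://management.azure.com/{path}?api-version={API_VERSION}"


# Placeholder values, for illustration only.
url = create_run_url("<subscription-id>", "rg-data", "adf-demo", "CopySalesPipeline")
print(url)
```

Sending an authenticated POST to this URL starts a run and returns a run ID, which you can then look up in the monitoring view.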

2. Connecting to Data Sources

Azure Data Factory can connect to over 90 data sources, including Azure SQL Database, Blob Storage, Cosmos DB, Amazon S3, MySQL, and more. ADF uses Linked Services to define connections to these external resources. When creating a pipeline, you reference these Linked Services, and for sources without a dedicated connector you can fall back on generic connectors such as REST, HTTP, or ODBC.
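To make the idea of a Linked Service concrete, here is a small sketch of what one looks like as JSON, written as Python dictionaries. It also includes a dataset that points at a file through that Linked Service, which is one way a pipeline can refer to the data. All names, the container, and the file name are hypothetical, and the connection string is left as a placeholder.

```python
import json

# A Linked Service holds the connection information for one external resource.
linked_service = {
    "name": "SalesBlobStorage",  # hypothetical name
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "connectionString": "<connection-string-placeholder>"
        },
    },
}

# A dataset describes specific data reachable through a Linked Service.
dataset = {
    "name": "SalesCsvDataset",  # hypothetical name
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "sales",      # assumed container
                "fileName": "daily.csv",   # assumed file
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(json.dumps([linked_service, dataset], indent=2))
```

Keeping the connection in one place means several datasets and pipelines can share it, so a change of credentials or endpoint only has to be made once.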

3. Data Transformation Activities

With ADF, you can perform various data transformations such as:

- Filtering records to extract specific data.

- Joining datasets from multiple sources.

- Aggregating data for reporting or further analysis.

These transformations can be designed visually, allowing even non-programmers to create powerful ETL workflows.
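For readers who think in code, the three transformations listed above can be pictured with a few lines of plain Python. This is not ADF code, only an illustration of the logic that the visual transformations express. The sample records and the threshold of 50 are made up.

```python
from collections import defaultdict

# Made-up sample data: orders from one source, customer regions from another.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 120.0},
    {"order_id": 2, "customer_id": "c2", "amount": 75.0},
    {"order_id": 3, "customer_id": "c1", "amount": 60.0},
    {"order_id": 4, "customer_id": "c3", "amount": 20.0},
]
customer_region = {"c1": "North", "c2": "South", "c3": "North"}

# Filter: keep only orders of at least 50.
large_orders = [o for o in orders if o["amount"] >= 50]

# Join: attach each order's region by looking up its customer.
joined = [{**o, "region": customer_region[o["customer_id"]]} for o in large_orders]

# Aggregate: total order amount per region.
totals = defaultdict(float)
for row in joined:
    totals[row["region"]] += row["amount"]
totals = dict(totals)

print(totals)  # {'North': 180.0, 'South': 75.0}
```

In ADF, each of these steps corresponds to a transformation you place on the design canvas and configure rather than write by hand.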

Real-World Use Cases for Azure Data Factory

1. Data Migration

Many organizations use ADF for data migration between legacy systems and cloud platforms. For example, a business could migrate its on-premises SQL database to Azure SQL Database using a simple ADF pipeline, enabling cloud-based analytics and scalability.

2. Building Data Lakes

Another popular use case is the creation of data lakes. Azure Data Factory allows businesses to aggregate and store large volumes of structured and unstructured data from multiple sources into a centralized Azure Data Lake, where it can be used for advanced analytics and machine learning.

3. Automating Data Workflows

Many organizations rely on ADF to automate recurring data workflows. For example, you could schedule a pipeline to extract daily sales data, transform it, and load it into the data store behind a Power BI dashboard so that reports reflect each day's refresh.
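A daily schedule like this is expressed as a trigger attached to a pipeline. The following sketch builds a schedule trigger definition that fires once a day. The trigger name, pipeline name, and start time are hypothetical, and the helper function is only for illustration.

```python
import json


def build_daily_trigger(name, pipeline_name, start_time_utc):
    """Return a schedule trigger definition that runs a pipeline once per day."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,  # every 1 day
                    "startTime": start_time_utc,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Hypothetical names and start time.
trigger = build_daily_trigger("DailySalesTrigger", "DailySalesPipeline", "2024-10-01T02:00:00Z")
print(json.dumps(trigger, indent=2))
```

A trigger has to be started after it is created before it begins firing, which is easy to overlook when moving from manual runs to scheduled ones.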

Best Practices for Using Azure Data Factory

1. Secure Data Integration

Ensure your data remains secure by following best practices for securing pipelines. ADF supports Managed Identities and Azure Key Vault integration for secure access to resources and credentials.
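As an illustration of the Key Vault integration, a Linked Service can point at a secret instead of embedding a password. The sketch below shows that pattern for an Azure SQL Database connection. The vault Linked Service name, secret name, and connection string placeholders are all assumptions.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Return a reference to a secret stored in Azure Key Vault."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


sql_linked_service = {
    "name": "SalesAzureSql",  # hypothetical name
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # The connection string carries no password; placeholders are assumptions.
            "connectionString": (
                "Server=tcp:<server>.database.windows.net,1433;"
                "Database=<database>;User ID=<user>;"
            ),
            # The password is resolved from Key Vault at run time.
            "password": key_vault_secret("CompanyKeyVault", "sales-sql-password"),
        },
    },
}

print(json.dumps(sql_linked_service, indent=2))
```

With this arrangement the secret never appears in the pipeline definition or in source control, and rotating it in Key Vault requires no change to the factory.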

2. Optimizing Pipeline Performance

Optimize your pipelines by managing Data Integration Units (DIUs), previously called Data Movement Units (DMUs), effectively. DIUs determine how much compute a copy activity can use, so adjusting their number can significantly improve data movement throughput.
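In a Copy activity's JSON, this throughput setting appears as `dataIntegrationUnits`, alongside an optional `parallelCopies` setting. The helper below is a hypothetical sketch that applies both to an existing activity definition without modifying the original. The values 16 and 4 are arbitrary examples, not recommendations.

```python
import copy


def with_copy_tuning(copy_activity, data_integration_units, parallel_copies=None):
    """Return a copy of the activity with throughput settings applied."""
    tuned = copy.deepcopy(copy_activity)
    tuned["typeProperties"]["dataIntegrationUnits"] = data_integration_units
    if parallel_copies is not None:
        tuned["typeProperties"]["parallelCopies"] = parallel_copies
    return tuned


# A minimal Copy activity with assumed source and sink types.
base_activity = {
    "name": "CopySourceToSink",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

tuned_activity = with_copy_tuning(base_activity, data_integration_units=16, parallel_copies=4)
print(tuned_activity["typeProperties"])
```

Higher values are not automatically faster, since the source or sink may be the bottleneck, so it is worth comparing run durations in the monitoring view before and after a change.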

3. Cost Management in ADF

ADF’s pay-as-you-go pricing can be cost-effective, but charges accrue from pipeline activity runs, data movement, and data flow execution, so monitor these costs and size your compute to match your business needs.

Advanced Features of Azure Data Factory

1. Mapping Data Flows

ADF’s Mapping Data Flows allow you to design complex transformations with an intuitive drag-and-drop interface. This feature is ideal for scenarios where simple transformations won’t suffice.

2. Integration with Azure Synapse

Azure Data Factory integrates seamlessly with Azure Synapse Analytics, giving businesses a complete end-to-end solution for large-scale data processing and analytics.

3. Version Control with Git

You can integrate ADF with Git for version control, allowing your team to collaborate on pipelines, track changes, and deploy them to different environments.

Conclusion: Why Azure Data Factory is Essential for Modern Data Integration

Azure Data Factory simplifies the process of ingesting, transforming, and loading data across cloud and on-premises environments. Its user-friendly interface, scalability, and integration with various Azure services make it a must-have tool for any organization seeking to streamline its data operations. By following best practices and leveraging ADF’s advanced features, you can transform how your business handles data.

Ready to Transform Your Data Integration Process?

Unlock the power of Azure Data Factory and streamline your data workflows, from cloud to on-premises integration. Whether you're migrating data, building data lakes, or automating complex ETL pipelines, Azure Data Factory can help you scale faster and smarter. Want to learn how to get started or optimize your current setup?

Contact us today for expert guidance on mastering Azure Data Factory for your business!

Ratheesh Kumar

Certified Cloud Architect & DevOps Expert
